Artificial intelligence tools are used almost everywhere, from social media to police precincts and courts. Civics 101’s host Hannah McCarthy and Julia talk about how AI is governed today and whether current laws are enough to protect our rights and privacy.

When we talk about AI, when we talk about the algorithm, machine learning, what are we actually talking about?

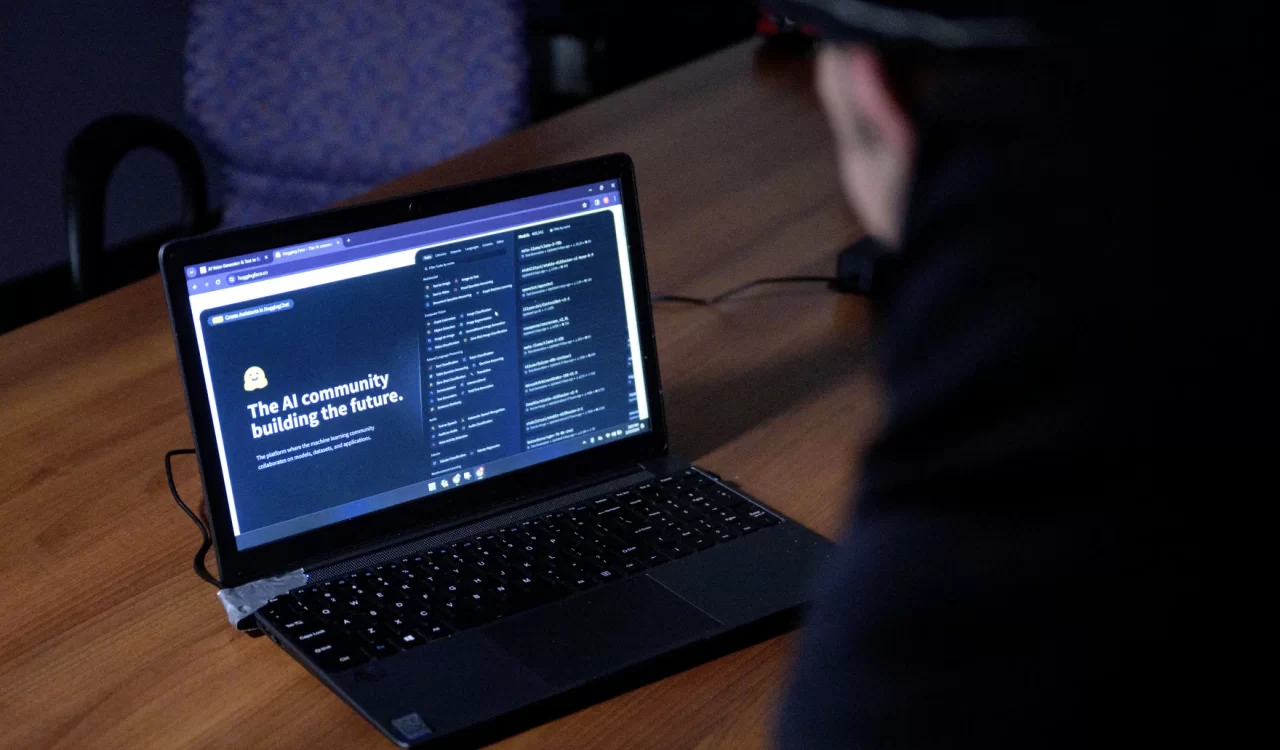

We are talking about a whole swath of technology. We’re going to call it AI for the purposes of this conversation, but machine learning is the term typically applied to the type of tech that city and state governments are using.

Most people get their day-to-day dose of AI through social media and search engines like Google. An algorithm takes existing, available data about you and uses it to display content you might be interested in. It feeds you ads based on what you’ve searched for in the past. It is, very simply, a prediction tool. And that same type of technology is also being used by government offices to do a wide range of work for city, state and country populations.

Are AI tools currently governed by our laws?

So at the federal level, President Joe Biden signed an executive order that is designed to make AI safe, secure and trustworthy. And that same administration proposed an AI Bill of Rights. Now the executive order is enforceable. The [AI] Bill of Rights is not enforceable. It just suggests how AI might be governed going forward.

And while we have had dozens of House and Senate committee hearings on AI, laws that regulate AI are really slow to develop at the federal level. So in lieu of that, there are several states that have passed legislation to protect data privacy, prevent bias and increase transparency, all as their governments adopt AI technologies.

But the issue is that these technologies are developing at an incredible pace. The minute something new hits the market, that’s something that has to be questioned, often through court cases. For example, there was a tool that was measuring basically the performance of teachers in a public school. One teacher who was assessed by this AI technology sued, essentially, and said, this is a violation of my rights. So it’s really piecemeal.

So I would say, to answer your question: Are there aspects of AI that are not able to be governed by our current laws? Plenty of them, because our current laws are often not specific enough to actually govern that AI.

How should U.S. courts and lawmakers think about governing AI tools? Is it even possible for them to do so?

It’s definitely possible. This is about protecting rights and protecting privacy. That is something that we do in the United States. We have always developed laws as technology develops.

But again, the U.S. is looking to its states. It’s looking to other nations. And I want to say most recently, the EU passed the AI Act to regulate machine learning technology. And it does outright ban all kinds of exploitative and intrusive tech like deepfake stuff, categorizing people based on certain biometric data, and, I kid you not, Julia, emotion-inferring AI in the workplace and education is also banned.

And then this same act defines high-risk environments for AI tech and attempts to regulate how it’s going to be used there. So that includes health care, law enforcement, criminal justice. So thinking about, as these technologies are being developed, what’s going to govern them as they come out? It’s sort of trying to get ahead of the curve there. And I expect other nations, especially the U.S., to watch the rollout of this act and see what kind of impact it’s going to have and whether or not we are essentially going to copy it.